From reel to real: Translating movie magic into cybersecurity realities

With the Oscars just around the corner, movie fans everywhere are gearing up to celebrate the best films of the year. But those of us with a passion for cybersecurity can’t help but view these celebrated movies through a unique lens, scrutinizing every detail in high-tech scenes. Somehow, in those Hollywood blockbusters, hacking into even a massive, complex system seems so simple. The hero types furiously and breaks into a top-secret network in seconds.

Of course, in reality, cybercrime is slower-paced and more complex. But we get it — that wouldn’t make for a very exciting movie. That’s why we so often see those over-the-top hacking scenes.

This awards season had us thinking about both the new movies up for awards this year and those classics that have left a lasting impression on us. So we turned to our Lacework Labs team to explore some high-tech scenes from our favorite movies and TV shows and figure out which scenes were realistic. Here’s what they had to say.

Advanced AI: More than just movie magic?

Mission: Impossible – Dead Reckoning, Part One (which was nominated in two Oscar categories this year) is all about “The Entity,” an advanced, self-aware artificial intelligence (AI) program. In the movie, Tom Cruise’s character Ethan Hunt races against time to find this deadly new weapon that could doom humanity.

While the idea of a sentient AI like “The Entity” is a product of Hollywood, the potential for AI to disrupt isn’t pure fiction. AI doesn’t need self-awareness to pose a cybersecurity threat, but it’s not exactly plotting world domination either — at least not outside of the movies.

Consider the infamous paper clip scenario from the book Superintelligence: Paths, Dangers, Strategies. If you haven’t heard of it, the book explores what happens when machines become more intelligent than humans, and the paper clip scenario is a thought experiment showing how AI, lacking any real consciousness, could still cause chaos on a global scale.

“When tasked with maximizing paper clip production, the ‘superintelligence’ keeps increasing the number of paper clips until the entire planet is nothing but paper clips, otherwise known as the second most frightening apocalyptical scenario involving paper clips after having Clippy enabled by default on all Office applications,” Tareq Alkhatib, Security Engineer at Lacework, said. (Honestly, it’s a toss-up between a planet overrun by paper clips and Clippy’s endless “assistance”; it’s hard to decide which is the lesser of two evils.)

This scenario is a classic example of the alignment problem, which suggests that, as humans, we often struggle to guide a more advanced intelligence toward our objectives. Superintelligence explains how AI could cause issues even if it’s asked to limit production within a certain geographical space or with limited resources. A seemingly benign task could spiral into a dystopian nightmare.

“A superintelligence asked to ‘protect a network’ can easily decide that the only way to defend a network is to nuke every other network in the world. This super intelligence in this case is not conscious, it is merely acting upon its orders,” Tareq said.

Chris Hall, Cloud Security Researcher at Lacework, brings a unique angle to the discussion of advanced AI, intertwining it with the concept of simulation theory. “Some say there is a high probability that we are currently living in a simulation, which would mean that we are a construct in that simulation — or in other words, AI ourselves. If this is to be believed, then AI has the capacity to be as good or bad as the individual AI. This would bring with it all the things that make us human such as self awareness, morals or lack of morals. Let’s just hope the AI that eventually takes over is a good one!” Chris said.

While we can’t say for sure whether this idea stretches beyond our current AI capabilities (Hey, who’s to say we’re not already living in a simulation?!), it’s a fascinating perspective that prompts us to think deeply about AI’s future path. It raises questions about the ethical implications of AI and the importance of developing detailed guidelines for its growth.

Hacking the CIA: Not as easy as the movies make it seem

Another film that stretches cybersecurity credibility is Jason Bourne. In one scene, former CIA analyst Nicky Parsons nonchalantly hacks into the agency’s servers and downloads classified “Black Ops” files with ease. The CIA team quickly detects the breach but can’t stop her in time.

According to Chris, who worked in the US intelligence community for several years, this depiction is far-fetched. Highly sensitive data would be securely siloed, not conveniently gathered in one “Black Ops” folder. Very few people in the agency would have access to see all of the operations as shown in this scene.

The characters also improbably trace the hack’s origin and access multiple IP addresses within seconds. In reality, those steps could take months and involve legal hurdles. Hollywood conveniently bypasses such real-world constraints for dramatic effect.

Cracking the code

In the 1992 movie Sneakers, a group of hackers steals a “black box” device that can crack any encryption code in the world. In one scene, they use it to break into major systems like the US power grid and air traffic control in less than a minute.

But could a device like that realistically exist? Cybersecurity experts say no, at least not with 1990s technology. Today’s intelligence agencies spend years rigorously testing encryption algorithms to hunt for any potential flaws before approving them. Breaking robust modern encryption would likely require a major computing breakthrough like quantum computing.

Although the characters couldn’t realistically crack complex keys that quickly, getting into such systems doesn’t always require advanced hacking. Many of them are more accessible today than the film suggests, with industrial control systems and other devices exposed on the open internet.

The search engine Shodan indexes internet-connected devices, and it has enabled real-world hacks in which attackers exploited default passwords and misconfigurations.

“Misconfigurations or forgetting to change default credentials are some of the biggest access points for hackers,” Chris said. While the black box in Sneakers is technically unrealistic, the film highlights legitimate risks that are more tangible today than when it was made.
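To make the default-credentials risk concrete, here is a minimal sketch of the kind of check a team might run against its own device inventory. Everything in it is an assumption made for illustration: the `KNOWN_DEFAULTS` list, the `find_default_credentials` helper, and the sample inventory are hypothetical and do not come from Shodan, Lacework, or any real tool.

```python
# Hypothetical sample of well-known factory-default username/password pairs.
# A real audit would use a much larger, vendor-specific list.
KNOWN_DEFAULTS = {
    ("admin", "admin"),
    ("admin", "password"),
    ("root", "root"),
    ("admin", ""),
}


def find_default_credentials(devices):
    """Return the names of devices still using a known default login."""
    return [
        device["name"]
        for device in devices
        if (device["username"], device["password"]) in KNOWN_DEFAULTS
    ]


# Made-up inventory of internet-connected devices, for illustration only.
inventory = [
    {"name": "hvac-controller", "username": "admin", "password": "admin"},
    {"name": "badge-reader", "username": "svc_badge", "password": "k9#Vt!2p"},
    {"name": "ip-camera-3", "username": "root", "password": "root"},
]

flagged = find_default_credentials(inventory)
print(flagged)
```

In this toy inventory, the two devices still using factory logins are flagged, which is exactly the kind of low-effort entry point Chris describes.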

Keeping it real: Mr. Robot’s hacking authenticity

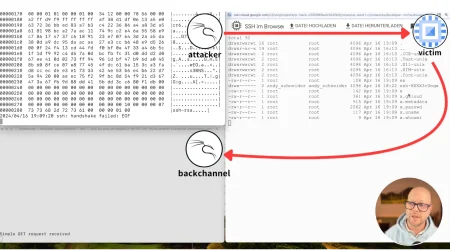

The TV series Mr. Robot, which follows a cybersecurity engineer and hacker named Elliot tasked with taking down corporate giants, stands out for its realistic depictions of hacking and cybersecurity.

In one scene, Elliot hacks into smart TVs, a realistic scenario. “Mr. Robot does a good job of showing different technical tactics in addition to the human social networking part of it,” Chris said. “Hacking smart TVs is definitely doable, in fact, much easier to hack than traditional cable. You could project via chromecast or related screen mirroring software. There are a lot of possibilities when you’re connected to the same local network as the smart TV.”

The scene also shows Elliot obtaining a multi-factor authentication (MFA) code from someone’s phone to then log into their account from his computer — another plausible tactic if you have physical device access.

While MFA prevents many attacks, attackers have found ways to bypass it, such as taking over a session after it has already been authorized. If they have access to your device and your credentials, MFA offers little protection, as the show realistically depicts.

Anomaly detection helps find the crime

Season 1 of Bodies on Netflix shifts between four different timelines, focusing on one detective in each. The 2053 timeline of this sci-fi crime show depicts a future with AI voice detection, anomaly detection, and cybertronic systems, a reality that may not be so distant. It centers on Detective Maplewood, and in the first episode, an AI system alerts her to an anomaly: a wounded man on a nearby road. This might sound like something straight out of a sci-fi novel, but we’re not too far off from this in real life, especially with advancements in anomaly detection, like those developed by Lacework for cloud security.

In Detective Maplewood’s case, she was alerted when something unusual happened nearby while she was working, and that unusual activity was an indication of a crime. Anomaly detection in the cloud works the same way: it alerts users when something out of the ordinary occurs, such as a surge in network traffic, a burst of failed login attempts, or strange domain names. This is exactly what Lacework does, incorporating AI and machine learning not just to keep an eye out for bad things but to understand what “normal” looks like and help cloud users spot the “not normal” things that could indicate hackers are trying to sneak in (or already have).
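The idea of learning what “normal” looks like and then flagging the “not normal” can be sketched in a few lines. This is a deliberately simple illustration using a standard-deviation threshold, not a description of how Lacework’s detection works; the `find_anomalies` function, the threshold of 3, and the login counts are all assumptions invented for the example.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value) pairs from `observed` that sit more than
    `threshold` standard deviations away from the baseline average."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: anything different is unusual.
        return [(i, v) for i, v in enumerate(observed) if v != mu]
    return [
        (i, v)
        for i, v in enumerate(observed)
        if abs(v - mu) / sigma > threshold
    ]


# Made-up hourly counts of failed logins during a "normal" period.
baseline_failed_logins = [2, 3, 1, 4, 2, 3, 2, 1, 3, 2]

# Made-up counts for the hours being checked; the third hour has a spike.
todays_failed_logins = [2, 3, 48, 1]

anomalies = find_anomalies(baseline_failed_logins, todays_failed_logins)
print(anomalies)
```

Here only the hour with 48 failed logins is flagged. Production systems model far more signals and far subtler patterns, but the principle is the same: establish a baseline first, then alert on departures from it.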

Fictional stories; real problems

As we get ready to watch the Oscars this weekend, celebrating the best movies of the year, we’re reminded that the line between Hollywood’s cybersecurity fantasies and our reality isn’t just blurring — it’s a source of vital lessons.

Here’s to enjoying the Oscars and to taking the cybersecurity lessons from our favorite films and series from reel to real life.

Want to put your cyber movie knowledge to the test? Take our Hackademy Awards Quiz for a chance to win a pair of movie tickets to see the Oscar winner of your choice.
