Why I’m Here

Please note: This post is an abridged version of the “Interview with Úlfar Erlingsson” conducted by the Research Team at Sutter Hill Ventures.

Like a lot of people who work in security, I got into it by accident. I was pursuing a PhD in the mid-90s when, suddenly, the Internet happened: everybody in the world started using the Internet through a Web browser.

It was immediately clear that the Internet created a ridiculous number of security problems, to the extent that it seemed like this thing was never going to work. Meanwhile, I was working on reliable distributed computing—the foundations of what is today known as the cloud—and all of those security concerns were being raised there as well.

Despite spending my entire career in security, however, I’ve never been interested in the reactive, rules-based approach that has dominated the industry since its inception.

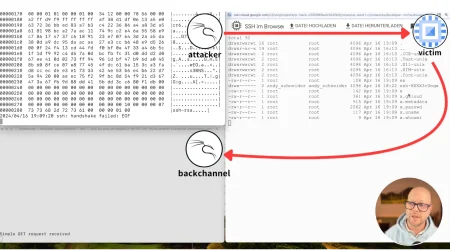

There are two diametrically opposite approaches to securing software activity. One approach is to build rules that recognize bad activity (potential pitfalls) and throw up red flags when an activity that matches those rules is detected. That’s pretty much how the entire security industry has worked since the 1980s. The first antivirus vendors created signature-based antivirus, and since then all successful software security companies have taken essentially the same approach.

The opposite approach is to actually understand the customer’s workload and determine what it is supposed to be doing rather than what it shouldn’t be. At first glance, this seems like a much simpler task. The set of activities that a piece of software should perform is much smaller than the (countably) infinite set of activities that it should never perform. This approach is called behavioral anomaly detection and it allows us to enforce security by ensuring that only those things that are supposed to happen are actually happening.
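To make the contrast concrete, here is a minimal sketch of the "what should happen" approach. It assumes activity can be represented as simple `(actor, action)` pairs, which is a hypothetical simplification for illustration and not a description of any real product. The baseline is learned from observed behavior, and anything outside it is reported.

```python
from typing import Iterable, List, Set, Tuple

# Hypothetical representation of one activity: (actor, action).
Activity = Tuple[str, str]


def learn_baseline(observed: Iterable[Activity]) -> Set[Activity]:
    """Record the set of activities seen during normal operation."""
    return set(observed)


def find_anomalies(baseline: Set[Activity],
                   new_activity: Iterable[Activity]) -> List[Activity]:
    """Return every activity that was never seen in the baseline."""
    return [a for a in new_activity if a not in baseline]


# Illustrative data only.
normal = [("web", "read:db"), ("web", "listen:443"), ("batch", "write:db")]
baseline = learn_baseline(normal)

today = [("web", "read:db"), ("web", "listen:69")]
anomalies = find_anomalies(baseline, today)
```

No rule about port 69 was ever written. The activity is flagged only because it is not part of what the workload is known to do.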

Though simple in concept, behavioral anomaly detection is actually very difficult to achieve in practice. For many reasons, learning what’s normal has been incredibly difficult and has historically been non-scalable. In the enterprise data center world, companies had a bunch of unique software and hardware, all cobbled together, typically over decades. Determining what worked for one company had almost no bearing on what would work for the next, and it was therefore impossible to achieve replicable success.

In the cloud, things are different. Most cloud-native companies are actually quite similar. We can work with them to continually improve what we do, gain better insights into it, and monitor how well we’re doing. We fail less than the competition, and every single time we do fail, it’s an opportunity for us to shift our vantage point and catch a slew of similar problems in the future. For our competition, it’s instead an endless, Sisyphean task to maintain an exhaustive list of custom rules.

At Lacework, we have managed to build a top-tier security product based on accurately understanding what’s normal in our customers’ environments and reporting deviations from that sense of normality. It is a fundamental shift in how security is done, and it makes us unique, not just in our hands-on approach to cloud security, but also in how we define the value and parameters of security.

The key technology that drives Lacework’s unique value proposition is the Polygraph. A Polygraph is basically a virtual, abstract view of what is happening in the customer’s cloud.

The concrete details of what’s happening in the cloud involve things like processes launching, people completing their work, and things migrating from one machine to another. There is a lot of flux there. IP addresses are not static. DNS names are not static. Even the processes and jobs are not static. A lot of organizations have moved to microservices, which run maybe for a few seconds or minutes. By contrast, in enterprises, you traditionally might have had infrastructure components like client-server computing, where the whole job of the IT support team was trying to make those things run for months at a time without downtime. Now in the cloud, you have an abstraction that maybe is an ephemeral set of microservices, none of which lives more than 30 seconds. But that ephemeral set actually is a component, and it’s an essential part of what the customer is doing.

That ephemeral set of services also has particular behaviors. Even though it lives for a short time, it is getting its data from somewhere, and it is answering questions that are coming from somewhere. Figuring out that a particular ephemeral cloud of activities is actually one component, doing the same thing for the components that component talks to (which might also be virtual and ephemeral), and then figuring out what the right behavior is for those things allows us to generate our Polygraph.

So, when one of those processes all of a sudden opens a TFTP port, or starts reading data from a very different S3 bucket (things that are typical of bad behavior), we can spot it. That’s what the Polygraph does. It builds up these abstractions, these virtual abstract entities, which represent many activities, processes and machines, including machines that come and go due to elastic scaling. The Polygraph recognizes this as a single abstraction, and ties it to other abstractions, forming a graph that captures how abstractions should be communicating with, depending on, and interacting with each other. That’s our core technology.
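The idea of collapsing ephemeral instances into components and then watching the edges between them can be sketched in a few lines. This is only an illustration under stated assumptions, not Lacework's implementation: the `Event` fields, the service and bucket names, and the grouping key (a logical service name) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List, Set, Tuple


@dataclass(frozen=True)
class Event:
    """One observed interaction; all field names are hypothetical."""
    src_service: str   # logical component the caller belongs to
    src_instance: str  # ephemeral identity (IP, PID); ignored when grouping
    dst: str           # logical destination, e.g. a bucket or a port


Edge = Tuple[str, str]


def build_graph(events: Iterable[Event]) -> Set[Edge]:
    """Collapse ephemeral instances into components and record their edges."""
    return {(e.src_service, e.dst) for e in events}


def deviations(baseline: Set[Edge], events: Iterable[Event]) -> List[Event]:
    """Return events whose component-level edge is absent from the baseline."""
    return [e for e in events if (e.src_service, e.dst) not in baseline]


# Illustrative data: many short-lived instances, one logical component.
training = [
    Event("checkout", "10.0.0.5:4121", "s3://orders"),
    Event("checkout", "10.0.0.9:877", "s3://orders"),
    Event("checkout", "10.0.0.9:877", "service:payments"),
]
baseline = build_graph(training)

live = [
    Event("checkout", "10.0.3.77:65", "s3://orders"),      # new instance, known edge
    Event("checkout", "10.0.3.77:65", "s3://hr-records"),  # a very different bucket
    Event("checkout", "10.0.3.78:12", "udp/69"),           # TFTP port
]
flagged = deviations(baseline, live)
```

Because the graph is keyed on the component rather than on the instance, a brand-new machine that behaves like its peers raises nothing, while a new edge from the same component is reported.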

I joined Lacework because I’ve tried to pursue the anomaly-detection approach for well over a decade, and it’s extremely hard. It’s an amazing accomplishment that Lacework has managed to make it succeed. So, I’m here because I was amazed and I wanted to be part of the fun of fundamentally changing and improving how software security gets done in the cloud.
