ESG: 4 reasons why SecOps is still pretty difficult

Cybersecurity has never been for the faint of heart. Malicious cyber activity chases the sun; as hackers sleep on one side of the world, others are at work, trying to steal sensitive data for profit. And on the front lines are cybersecurity teams, often working at all hours of the day to eliminate risks and investigate potential breaches.

Then the cloud arrived, and cybersecurity became far more difficult. Anyone with an internet connection could access sensitive data from anywhere in the world. New attacker techniques, along with dangerous evolutions of legacy cyberattacks, flooded the zone. Shrinking this growing attack surface quickly became a tall order.

Over the years, new cloud security tools have helped teams rein in the chaos. But, even so, many security teams still seem at a loss. In fact, according to a recent Enterprise Strategy Group (ESG) survey on cloud detection and response (CDR), 51% of respondents indicated that SecOps is either as complicated or more complicated than it was 24 months ago.

Let’s dig into the most frequently cited reasons SecOps is struggling.

No automation for complex tasks

The scalability of the cloud is its superpower. But, without the proper tools and processes, scalability can also be its demise. Why? Because complex cybersecurity tools and processes require a heavy dose of manual lift, which can make SecOps teams feel like they’re always playing catch-up. And the research bears this out: 32% of respondents chose this pain point, making it the most cited contributor to SecOps difficulty.

Cybercriminals utilize advanced techniques to execute attacks with speed. Sophisticated social engineering, spear phishing, ransomware, and zero-day exploits make it harder for security teams to detect and respond to threats using manual processes.

The sheer volume of daily alerts exhausts staff. A recent study reported that security teams receive 500 or more alerts per day, fueled by multiple disparate security tools. Analyzing and triaging those alerts, and quickly deciding which ones to act on, does not scale with a manual approach.

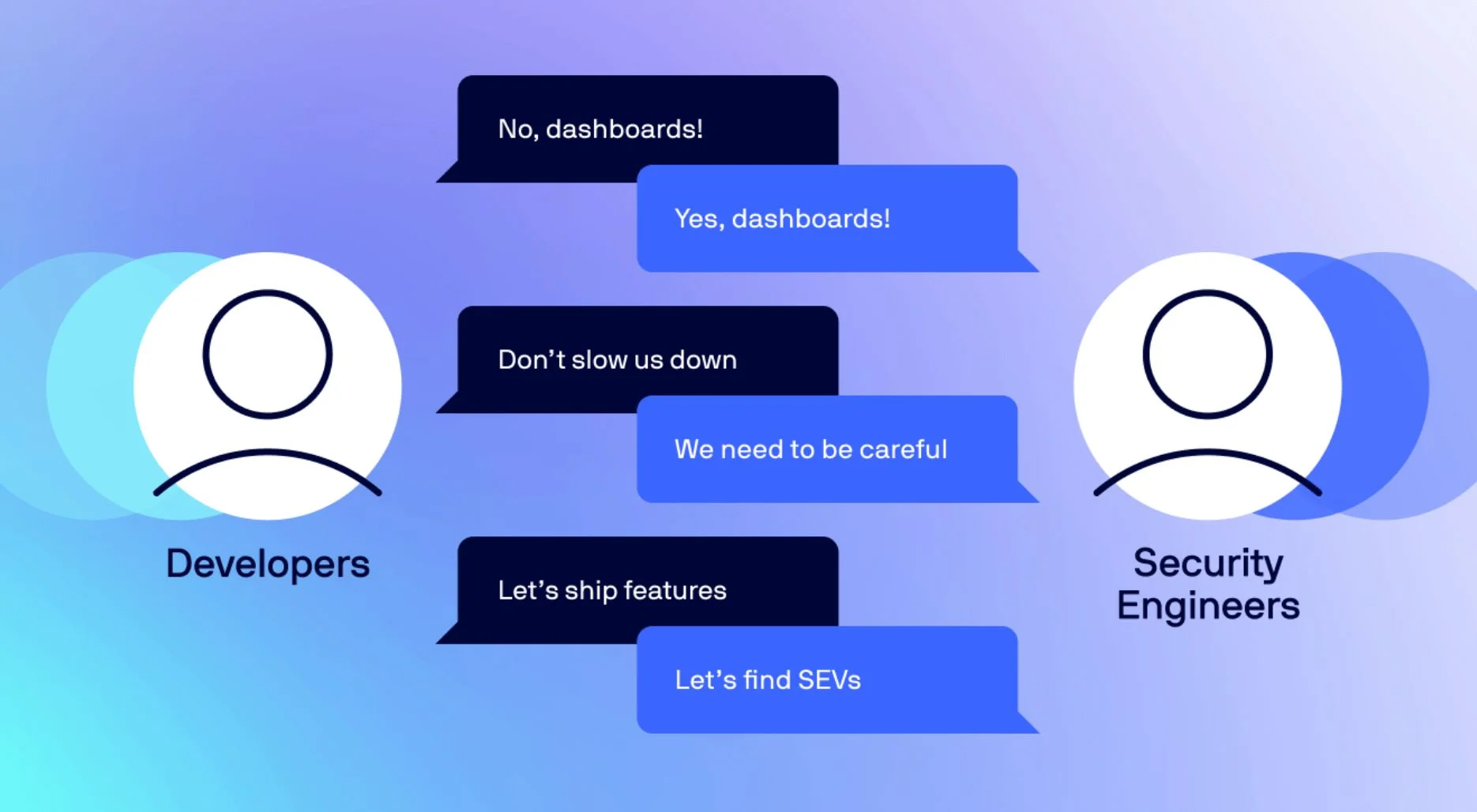

Without automation to help analyze the volume of data and prioritize mitigation efforts, security teams give developers lists of vulnerability fixes, with little context on what matters most. Friction intensifies between IT and security teams and ultimately derails innovation. And the shortage of skilled cybersecurity professionals only exacerbates the problem.
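To make the idea of automated prioritization concrete, here is a minimal sketch of alert triage. It assumes a simplified alert record and a hypothetical scoring scheme (severity weight multiplied by an asset criticality value); the tool names, resources, and weights are illustrative and do not come from the ESG survey or any specific product.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical severity weights, chosen only for illustration.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 7, "critical": 10}


@dataclass(frozen=True)
class Alert:
    tool: str        # which security tool raised the alert
    resource: str    # affected cloud resource
    finding: str     # short description of the issue
    severity: str    # one of the keys in SEVERITY_WEIGHT


def triage(alerts, asset_criticality):
    """Collapse duplicate findings and rank them by severity x asset criticality."""
    grouped = defaultdict(list)
    for alert in alerts:
        # The same finding on the same resource is one issue, however many tools report it.
        grouped[(alert.resource, alert.finding)].append(alert)

    ranked = []
    for (resource, finding), group in grouped.items():
        worst = max(SEVERITY_WEIGHT[a.severity] for a in group)
        score = worst * asset_criticality.get(resource, 1)
        ranked.append({
            "resource": resource,
            "finding": finding,
            "score": score,
            "reported_by": sorted({a.tool for a in group}),
        })
    # Highest score first, so the most important fix is at the top of the list.
    return sorted(ranked, key=lambda item: item["score"], reverse=True)


# Illustrative data: four raw alerts from two hypothetical tools.
alerts = [
    Alert("scanner-a", "payments-db", "open port 5432", "high"),
    Alert("scanner-b", "payments-db", "open port 5432", "medium"),
    Alert("scanner-a", "dev-sandbox", "outdated package", "critical"),
    Alert("scanner-b", "marketing-site", "weak TLS cipher", "low"),
]
criticality = {"payments-db": 5, "dev-sandbox": 1, "marketing-site": 2}

queue = triage(alerts, criticality)
for item in queue:
    print(item["score"], item["resource"], item["finding"], item["reported_by"])
```

In this sketch, the two overlapping reports about the payments database collapse into one entry, and that entry outranks a nominally "critical" finding on a low-value sandbox. That is the kind of context a flat list of vulnerability fixes lacks.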

The attack surface has grown and is constantly changing

The second-most cited reason for SecOps difficulty is a changing and growing attack surface (chosen by 30% of respondents). This shouldn’t come as a surprise to anyone familiar with the pains of modern cloud computing. If you ask any security leader about their foremost challenge in securing cloud environments, their answer will likely include something about a lack of visibility.

What’s out there? Where is my data? What is easily accessible? Am I at risk?

Cloud infrastructure and systems are constantly changing and expanding. A myriad of devices have access to cloud environments at all times. From medical devices that monitor health and wellness, to retail and manufacturing devices for point of sale and inventory management, and even transit systems for real-time routing, connections are everywhere, creating potential opportunities for attackers to exploit. Then, when you add in the relatively low barrier to entry in spinning up cloud environments, it’s easy to understand why SecOps teams feel overwhelmed. Securing a moving target that is constantly morphing is anything but easy.

But perhaps the biggest risk comes not from the technology, but from the people who use it. According to Statista, the number of internet users worldwide stood at 5.18 billion in 2023. Translation: roughly two-thirds of the global population is now connected.

People, unlike machines, are human. Whether it’s trusting a third-party supply chain or believing your employees won’t bypass security measures, hiccups happen even with good intentions. If an employee downloads an unauthorized application, falls prey to a phishing email or social engineering attack, or makes a simple cloud misconfiguration mistake, breaches can happen.

More security tools could actually mean more risk

In the ESG report, 28% of respondents indicated that security gaps caused by disparate tools and processes were a major source of SecOps pain. Recent studies indicate that, on average, organizations employ 30 or more security tools to protect their data.

It’s easy to understand how we got here. Bad actors find new ways to exploit new technologies at an extremely fast rate. As each new attack vector surfaces, the market is flooded with point solutions that address that one problem. Before you know it, your company has a tech stack of ten or more loosely integrated security tools.

But during incident investigation, SecOps teams are forced to manually piece together insights across these multiple interfaces. And, as the ESG survey data suggests, even with multiple solutions in place, organizations can’t easily detect threats in time to prevent incidents, or respond efficiently enough to mitigate their impact. At what point do these “solutions” actually become problems?

To limit gaps, organizations need an agreed-upon security strategy that is supported by all stakeholders: IT, development, operations, and security. Without alignment on tools and processes that share information, firefighting and burnout will persist. With proper security monitoring, SecOps teams can prioritize risk, communicate effectively across teams, and gain control over cloud environments.

No time for rules

Another component of legacy threat detection that doesn’t scale? Rules.

According to the ESG report, 26% of respondents cited difficulty developing security rules in a timely manner as a source of SecOps frustration. Reliance on rules and signatures may have worked traditionally; however, to be effective in the cloud, threat rules need to be constantly tweaked and maintained.

Rule creation and maintenance require immense amounts of time, which is a luxury that bandwidth-deprived security teams don’t have. Rules are also very limited, especially when dealing with an ever-evolving attack surface. Rules-based detection tools work by monitoring for known exploits or looking for activity patterns indicative of known attacks. However, they fail to identify the evolving threats now targeting cloud infrastructure and applications: cloud ransomware, malicious cryptomining, and others.

Even if security teams had ample time to maintain rules (which they don’t), leaders need to ask themselves if this is an effective use of time, given the limitations of tracking threats with rules and signatures — especially if more scalable methods exist, like anomaly-based threat detection.
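The difference between the two approaches can be illustrated with a toy example. The sketch below compares a signature-style rule (a hypothetical list of known miner process names) with a very simple anomaly check, a z-score against a baseline of normal CPU usage. This is a deliberately basic statistical technique used only to show the principle; it is an assumption for illustration and not a description of how any particular product detects anomalies.

```python
from statistics import mean, stdev

# Hypothetical signature list: the rule only knows about names it has seen before.
KNOWN_BAD_PROCESSES = {"xmrig", "minerd"}


def rule_based_alert(event):
    """Signature-style rule: fire only when the process name is on the known-bad list."""
    return event["process"] in KNOWN_BAD_PROCESSES


def anomaly_alert(event, baseline_cpu, threshold=3.0):
    """Fire when CPU usage deviates from the baseline by more than `threshold` standard deviations."""
    mu = mean(baseline_cpu)
    sigma = stdev(baseline_cpu)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return event["cpu_percent"] != mu
    z_score = abs(event["cpu_percent"] - mu) / sigma
    return z_score > threshold


# Illustrative baseline of normal CPU usage (percent) for one workload.
baseline = [12, 15, 11, 14, 13, 16, 12, 14]

# A cryptominer running under a renamed binary, and an ordinary web process.
miner_event = {"process": "renamed-worker", "cpu_percent": 97}
normal_event = {"process": "web", "cpu_percent": 15}

print("rule fires on miner:   ", rule_based_alert(miner_event))
print("anomaly fires on miner:", anomaly_alert(miner_event, baseline))
print("anomaly fires on web:  ", anomaly_alert(normal_event, baseline))
```

The rule misses the miner because nobody has written a signature for its new name, while the anomaly check flags it because the behavior departs sharply from the workload’s own history. No rule had to be written or maintained for that to happen.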

What does success look like?

Thankfully, all of these issues can be solved; the Lacework Polygraph® Data Platform was tailor-made to address each of these pains. With Lacework, teams can consolidate tools, eliminate gaps, scale security operations up or down with ease, and even eliminate the need for rules.

Interested in hearing more? Read this solution brief to learn how Lacework can specifically address each of the issues mentioned in this post.

And access the ESG report — Cloud Detection and Response: Market Growth as an Enterprise Response — for full results from the research.
